I recently discovered SerpApi and thought it would be fun to build a simple application on top of its search results. If you haven't come across SerpApi, they specialize in scraping practically every search engine in existence and packaging the results in an easy-to-use API.

This gives businesses a powerful tool for monitoring search results that can help optimize their SEO, track news, or train AI models. Ultimately, the only limitation is one's own creativity.

My idea was to wire up SerpApi with OpenAI to get ChatGPT to analyze search results based on user-generated prompts. Here's how I built it.

Initial Setup

rails new search-results-ai-analyzer

cd search-results-ai-analyzer

rails db:migrate

I'm also going to install Tailwind CSS to make it slightly easier on the eyes. I suggest following the installation steps on the Tailwind website.

Check that everything is working by firing up the server. With Tailwind installed, we start the server by running bin/dev.

Next, we'll create a SearchAnalysis model using Rails' scaffold generator to save us some time. The model needs the following fields:

- query: what we want to search

- engine: enum of which search engine to use

- prompt: what we want to ask ChatGPT to do

- search_results: the results from SerpApi

- chat_response: the response from ChatGPT

- status: enum of where we are in the process (e.g. pending, failed, complete)

Run the scaffold generator and migrate the database with the commands below.

rails g scaffold SearchAnalysis query:text engine:integer prompt:text search_results:text chat_response:text status:integer

The status field should have a default value of 0. This must be set manually in the migration file before running the migration. 0 will be defined as pending_search in the model file.

# db/migrate/20240122133152_create_search_analyses.rb

class CreateSearchAnalyses < ActiveRecord::Migration[7.0]

def change

create_table :search_analyses do |t|

t.text :query

t.integer :engine

t.text :prompt

t.text :search_results

t.text :chat_response

t.integer :status, default: 0

t.timestamps

end

end

end

Now we run our migration.

rails db:migrate

Add our enum values and some very basic validations for user-submitted data.

# app/models/search_analysis.rb

class SearchAnalysis < ApplicationRecord

enum :engine, { google: 0, bing: 1, duckduckgo: 2, yahoo: 3, yandex: 4, baidu: 5 }

enum :status, { pending_search: 0, pending_chat: 1, complete: 2, failed: 3 }

validates :query, presence: true

validates :engine, presence: true

validates :prompt, presence: true

end

Change the root path to map to search_analyses#index in your config/routes.rb file.

# config/routes.rb

Rails.application.routes.draw do

resources :search_analyses

root "search_analyses#index"

end

We don't want our forms to ever accept user-submitted data for the status, search_results, and chat_response fields, so we'll remove those from our strong params method.

# app/controllers/search_analyses_controller.rb

# Only allow a list of trusted parameters through.

def search_analysis_params

params.require(:search_analysis).permit(:query, :engine, :prompt)

end

We should also remove those fields from our form so the user doesn't even see them.

While we're editing our form, let's also make the field labels clearer and have the search engine field use a select dropdown.

Your form should look like this:

# app/views/search_analyses/_form.html.erb

<%= form_with(model: search_analysis, class: "contents") do |form| %>

<% if search_analysis.errors.any? %>

<div id="error_explanation" class="bg-red-50 text-red-500 px-3 py-2 font-medium rounded-lg mt-3">

<h2><%= pluralize(search_analysis.errors.count, "error") %> prohibited this search_analysis from being saved:</h2>

<ul>

<% search_analysis.errors.each do |error| %>

<li><%= error.full_message %></li>

<% end %>

</ul>

</div>

<% end %>

<div class="my-5">

<%= form.label :query, "Search Query" %>

<%= form.text_field :query, class: "block shadow rounded-md border border-gray-200 outline-none px-3 py-2 mt-2 w-full" %>

</div>

<div class="my-5">

<%= form.label :engine, "Search Engine" %>

<%# The third argument is select options; HTML attributes such as class go in the fourth. %>
<%= form.select(:engine, ['google', 'bing', 'duckduckgo', 'yahoo', 'yandex', 'baidu'], {}, { class: "block shadow rounded-md border border-gray-200 outline-none px-3 py-2 mt-2 w-full" }) %>

</div>

<div class="my-5">

<%= form.label :prompt, "Chat Prompt" %>

<%= form.text_area :prompt, rows: 3, class: "block shadow rounded-md border border-gray-200 outline-none px-3 py-2 mt-2 w-full" %>

</div>

<div class="inline">

<%= form.submit class: "rounded-lg py-3 px-5 bg-blue-600 text-white inline-block font-medium cursor-pointer" %>

</div>

<% end %>

As an optional but recommended step, you can remove the following things the scaffold generated that we don't need.

- The edit and update actions in the SearchAnalyses controller.

- The entire edit view file views/search_analyses/edit.html.erb

- The "Edit this search analysis" buttons in views/search_analyses/_search_analysis.html.erb and views/search_analyses/show.html.erb

Give your form a quick test before moving on.
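Before moving on, here is a rough plain-Ruby illustration of what trimming the permitted list buys us. It is not how Rails implements strong parameters; PERMITTED_KEYS and filter_params are hypothetical names used only for this sketch.

```ruby
# Hypothetical stand-in for strong parameters: keep only whitelisted keys.
PERMITTED_KEYS = %w[query engine prompt].freeze

def filter_params(raw_params)
  raw_params.slice(*PERMITTED_KEYS)
end

submitted = {
  "query" => "italian city with best food",
  "engine" => "google",
  "prompt" => "Which city should I visit?",
  "status" => "complete",           # a tampered field a user might sneak in
  "chat_response" => "fake answer"  # likewise never accepted from the form
}

# Only query, engine, and prompt survive the filter.
puts filter_params(submitted).keys.inspect
```

Even if someone hand-crafts a request with extra fields, they are dropped before the record is built.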

Connecting to OpenAI/ChatGPT

Now we'll wire up our app to talk to OpenAI's API. If you don't have an OpenAI API account yet, create one here first and then find the API Keys page. Create a key and store it somewhere safe. OpenAI will only show you the key once.

Hopefully OpenAI will give you some free credit to play around with the API. If not, you'll need to add at least $5 credit on the Billing page. $5 will go very far for just hobby usage.

We'll be using the ruby-openai gem. It's not an official library made by OpenAI, but it more than does the job and OpenAI recommends it in their API documentation.

Add gem "ruby-openai" to your Gemfile and run bundle install.

We'll also want to create an initializer file to configure the gem with our OpenAI API key.

touch config/initializers/openai.rb

Add the following to the file.

# config/initializers/openai.rb

OpenAI.configure do |config|

config.access_token = ENV.fetch("OPENAI_ACCESS_TOKEN")

end

We'll also need to set this environment variable in our command line to get this to work.

export OPENAI_ACCESS_TOKEN=your_api_key_here

We can test that all this is working by opening up the rails console. You should be able to run client = OpenAI::Client.new to create a client and then client.models.list to get a list of available AI models.

user@host:~/search-results-ai-analyzer$ rails c

Loading development environment (Rails 7.0.8)

irb(main):001> client = OpenAI::Client.new

=>

#<OpenAI::Client:0x00007fa6404fa6f8

...

irb(main):002> client.models.list

=>

{"object"=>"list",

"data"=>

[{"id"=>"curie-search-query",

"object"=>"model",

"created"=>1651172509,

"owned_by"=>"openai-dev"},

{"id"=>"babbage-search-query",

"object"=>"model",

"created"=>1651172509,

"owned_by"=>"openai-dev"},

{"id"=>"dall-e-3",

"object"=>"model",

"created"=>1698785189,

"owned_by"=>"system"},

{"id"=>"babbage-search-document",

"object"=>"model",

"created"=>1651172510,

"owned_by"=>"openai-dev"},

{"id"=>"dall-e-2",

...

If the above fails, the most likely cause is a problem with your API key configuration. Ensure your environment variable is set and the initializer file references it correctly.
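One way to narrow down a configuration problem: ENV.fetch raises a KeyError when the variable is missing, whereas ENV[] quietly returns nil. The sketch below shows the difference using made-up variable names; describe_env, DEMO_MISSING_TOKEN, and DEMO_PRESENT_TOKEN are assumptions for illustration and are not used by the app.

```ruby
# Hypothetical helper: report whether a variable is set without printing its value.
def describe_env(name)
  ENV.fetch(name)
  "#{name} is set"
rescue KeyError
  "#{name} is missing"
end

# DEMO_MISSING_TOKEN is a made-up name, deleted here to guarantee it is unset.
ENV.delete("DEMO_MISSING_TOKEN")
puts describe_env("DEMO_MISSING_TOKEN")

# ENV[] returns nil instead of raising, which hides the problem until later.
puts ENV["DEMO_MISSING_TOKEN"].inspect

# Once the variable exists, fetch succeeds.
ENV["DEMO_PRESENT_TOKEN"] = "abc123"
puts describe_env("DEMO_PRESENT_TOKEN")
```

Because the initializer uses ENV.fetch, a missing key fails loudly at boot rather than surfacing later as a confusing authentication error.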

We can hit the OpenAI API technically from nearly anywhere in our Rails app, but I prefer dropping this logic into a service. We'll create two directories and two files.

mkdir app/services

mkdir app/services/openai_services

touch app/services/openai_services.rb

touch app/services/openai_services/chat_service.rb

In app/services/openai_services.rb, we can initialize the module with the following code.

# app/services/openai_services.rb

module OpenAIServices

end

To be able to reference this module as OpenAIServices, we need to make an addition to our config/initializers/inflections.rb file. Otherwise, our Rails app will expect OpenaiServices because the file name is openai_services.rb and Rails doesn't know the ai part should read as AI. We'll encounter the same problem with SerpApi. We'll fix both with the code below.

# config/initializers/inflections.rb

ActiveSupport::Inflector.inflections(:en) do |inflect|

inflect.acronym "OpenAI"

inflect.acronym "SerpApi"

end

Open up our ChatService file and let's get to work on hitting the OpenAI API. We'll start by creating a ChatService class that is initialized with a search_analysis object. Our SearchAnalysis model includes everything we might need for our AI prompt.

# app/services/openai_services/chat_service.rb

module OpenAIServices

class ChatService

attr_reader :search_analysis

def initialize(search_analysis)

@search_analysis = search_analysis

end

end

end

Next, we'll create a call method that will handle the logic of sending a message to the Chat API.

# app/services/openai_services/chat_service.rb

module OpenAIServices

class ChatService

attr_reader :search_analysis

def initialize(search_analysis)

@search_analysis = search_analysis

end

def call

client = OpenAI::Client.new

response = client.chat(

parameters: {

model: "gpt-3.5-turbo",

messages: [{ role: "user", content: @search_analysis.prompt}],

temperature: 0,

})

chat_response = response.dig("choices", 0, "message", "content")

end

end

end

We ought to test this connection in rails console before continuing.

user@host:~/search-results-ai-analyzer$ rails c

Loading development environment (Rails 7.0.8)

irb(main):001> search_analysis = SearchAnalysis.new(prompt: "Hey, can you hear me?")

=>

#<SearchAnalysis:0x00007f6684c5a7f0

...

irb(main):002> OpenAIServices::ChatService.new(search_analysis).call

=> "Yes, I can hear you. How can I assist you today?"

Great, we got a working response back from ChatGPT. Now we'll improve the logic in our call method to follow the common Rails service object convention of returning an OpenStruct.

# app/services/openai_services/chat_service.rb

module OpenAIServices

class ChatService

attr_reader :search_analysis

def initialize(search_analysis)

@search_analysis = search_analysis

end

def call

begin

client = OpenAI::Client.new

response = client.chat(

parameters: {

model: "gpt-3.5-turbo",

messages: [{ role: "user", content: @search_analysis.prompt}],

temperature: 0,

})

chat_response = response.dig("choices", 0, "message", "content")

rescue StandardError => e

OpenStruct.new(success?: false, error: e)

else

OpenStruct.new({success?: true, results: chat_response})

end

end

end

end

The prompt we're sending to ChatGPT is currently just what the user put in the form, but we actually need to append some additional context to their prompt. Remember this is a search results analyzer, so we'll need to include the search results in the prompt. It'd also be a good idea to tell ChatGPT what our search query was. Let's add a private method called generate_prompt to handle this logic.

# app/services/openai_services/chat_service.rb

private

def generate_prompt

<<~PROMPT

#{@search_analysis.prompt}

My search query: #{@search_analysis.query}

The search results were:

#{@search_analysis.search_results}

PROMPT

end

We also need to update the content value in the chat parameters to call our method: messages: [{ role: "user", content: generate_prompt}].

# app/services/openai_services/chat_service.rb

def call

begin

client = OpenAI::Client.new

response = client.chat(

parameters: {

model: "gpt-3.5-turbo",

messages: [{ role: "user", content: generate_prompt}],

temperature: 0,

})

chat_response = response.dig("choices", 0, "message", "content")

rescue StandardError => e

OpenStruct.new(success?: false, error: e)

else

OpenStruct.new({success?: true, results: chat_response})

end

end

We'll do our rails console check again.

user@host:~/search-results-ai-analyzer$ rails c

Loading development environment (Rails 7.0.8)

irb(main):001* search_analysis = SearchAnalysis.new(

irb(main):002* query: "italian city with best food",

irb(main):003* prompt: "Analyze the search results below and tell me which city I should visit if I'm a foodie. Tell me why as well",

irb(main):004* search_results: "Surveys confirm Bologna as the food capital of Italy thanks to its wonderful food products like mortadella."

irb(main):005> )

=>

#<SearchAnalysis:0x00007f537cd93c40

...

irb(main):006> OpenAIServices::ChatService.new(search_analysis).call

=> #<OpenStruct success?=true, results="Based on the search results, it is suggested that you should visit Bologna if you are a foodie. Bologna is known as the food capital of Italy, and it offers wonderful food products like mortadella. This implies that Bologna has a rich culinary tradition and is renowned for its delicious Italian cuisine. As a foodie, you can expect to indulge in a wide variety of authentic and high-quality Italian dishes in Bologna, making it an ideal destination for your culinary exploration.">

This all looks good. We'll leave this for now and move on to our SerpApi connection.
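One aside before switching APIs: to exercise both the success and failure paths of this service pattern without spending API credit, one option is to hand the service a stand-in client. The sketch below is a variation under stated assumptions, not the code used in this app. FakeChatClient, DemoChatService, and the injected client: argument are all hypothetical, and the fake only mimics the response shape that response.dig("choices", 0, "message", "content") expects.

```ruby
require "ostruct"

# Hypothetical stand-in that mimics the shape of a chat completion response.
class FakeChatClient
  def initialize(fail_with: nil)
    @fail_with = fail_with
  end

  def chat(parameters:)
    raise @fail_with if @fail_with

    prompt = parameters[:messages].first[:content]
    { "choices" => [{ "message" => { "content" => "Echo: #{prompt}" } }] }
  end
end

# Same rescue/else flow as ChatService, with the client injected (an assumption).
class DemoChatService
  def initialize(prompt, client:)
    @prompt = prompt
    @client = client
  end

  def call
    response = @client.chat(
      parameters: {
        model: "gpt-3.5-turbo",
        messages: [{ role: "user", content: @prompt }],
        temperature: 0
      }
    )
    chat_response = response.dig("choices", 0, "message", "content")
  rescue StandardError => e
    OpenStruct.new(success?: false, error: e)
  else
    OpenStruct.new(success?: true, results: chat_response)
  end
end

ok = DemoChatService.new("ping", client: FakeChatClient.new).call
failing_client = FakeChatClient.new(fail_with: RuntimeError.new("timeout"))
bad = DemoChatService.new("ping", client: failing_client).call

puts ok.results
puts bad.error.message
```

Either way the caller gets back an object that answers success?, so it never has to rescue exceptions itself.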

Connecting to SerpApi

First, create a SerpApi account and then locate your API key.

SerpApi's free plan grants 100 searches per month. For our hobby purposes, this will be enough, but we should be judicious with how we use them. Fortunately, SerpApi caches recent searches so if you re-run the same search several times over the course of an afternoon, you'll get cached results that don't consume your free search allowance.

It's also worth noting your search history is stored in your dashboard with the complete JSON response as well as a UI rendering of what your search results would have looked like in the browser.

From what I can tell from job postings, SerpApi uses Ruby on Rails as a major part of their tech stack. They must be Rubyists at heart, so of course they have an official Ruby gem that we'll be using.

Add gem "google_search_results" to your Gemfile and run bundle install.

The following steps will be very similar to what we did for the OpenAI connection. First, we'll create an initializer to set our API key.

touch config/initializers/serpapi.rb

Set the key using an environment variable again.

export SERPAPI_API_KEY=your_api_key_here

And reference that environment variable in the initializer. We'll use the generic SerpApi class SerpApiSearch, which allows us to search any engine as long as we specify the engine in the request.

If you only want to use a particular search engine, you can set the key on classes like GoogleSearch, DuckduckgoSearch, YandexSearch, etc. By doing this, you can omit the engine in the API request since the engine is already set via the class. See the docs for more on this.

# config/initializers/serpapi.rb

SerpApiSearch.api_key = ENV.fetch("SERPAPI_API_KEY")

We'll test our setup in rails console.

user@host:~/search-results-ai-analyzer$ rails c

Loading development environment (Rails 7.0.8)

irb(main):001* query = {

irb(main):002* q: "italian city with best food",

irb(main):003* engine: "google"

irb(main):004> }

=> {:q=>"italian city with best food", :engine=>"google"}

irb(main):005> search = SerpApiSearch.new(query)

irb(main):006> organic_results = search.get_hash[:organic_results]

=>

[{:position=>1,

...

irb(main):007> organic_results

=>

[{:position=>1,

:title=>"9 Best Food Cities in Italy",

:link=>"https://www.celebritycruises.com/blog/best-food-cities-in-italy",

:redirect_link=>

"https://www.google.comhttps://www.celebritycruises.com/blog/best-food-cities-in-italy",

:displayed_link=>

"https://www.celebritycruises.com › blog › best-food-ci...",

:thumbnail=>

"https://serpapi.com/searches/65af9352fdca3e33d38b4de0/images/5c56f82085de25fb7b377b8923ee596b106bddcb2a9676ce6af1dc1ce6a4662b.jpeg",

:favicon=>

"https://serpapi.com/searches/65af9352fdca3e33d38b4de0/images/5c56f82085de25fb7b377b8923ee596b5a12458ea0949e9ab510eb6af83484f2.png",

:date=>"Nov 29, 2023",

:snippet=>

"9 Best Food Cities in Italy · Bologna · Palermo · Rome · Florence · Cagliari · Sorrento · Genoa · Parma. Street view of Parma. Parma. Often ...",

:snippet_highlighted_words=>["Best Food Cities", "Italy"],

...

This all looks good. Now onto our service object. Let's create the module and directory.

mkdir app/services/serpapi_services

touch app/services/serpapi_services.rb

touch app/services/serpapi_services/search_service.rb

# app/services/serpapi_services.rb

module SerpApiServices

end

Our initial setup looks exactly the same as our ChatService: we initialize an instance of the SearchService with a search_analysis.

# app/services/serpapi_services/search_service.rb

module SerpApiServices

class SearchService

attr_reader :search_analysis

def initialize(search_analysis)

@search_analysis = search_analysis

end

end

end

We'll then create a basic call method.

# app/services/serpapi_services/search_service.rb

module SerpApiServices

class SearchService

attr_reader :search_analysis

def initialize(search_analysis)

@search_analysis = search_analysis

end

def call

search = SerpApiSearch.new(

q: @search_analysis.query,

engine: @search_analysis.engine

)

search.get_hash[:organic_results]

end

end

end

We could theoretically take the whole JSON response from SerpApi and send it to ChatGPT for analysis. I've tested this and ChatGPT understands the content of the JSON well enough to analyze it. The trouble is that the JSON body is very verbose, with a lot of characters that are irrelevant to ChatGPT. This is an issue because OpenAI charges more for prompts with a lot of text. We'll need to cut down how much of the response body we use.

We'll simplify the search results to only use organic results and only use page titles, snippets, and links. We'll also save just the content and none of the JSON syntax. This will make it more readable for both humans and ChatGPT as well as keep the character count down.
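To get a feel for the savings, we can compare the character count of one raw result serialized as JSON with a trimmed text version. The sample hash below is abridged from the console output earlier in this post, and trimmed_text is a hypothetical helper for this comparison only. Character count is just a rough proxy, since OpenAI bills by tokens rather than characters.

```ruby
require "json"

# One organic result, abridged from the console output shown earlier.
SAMPLE_RESULT = {
  position: 1,
  title: "9 Best Food Cities in Italy",
  link: "https://www.celebritycruises.com/blog/best-food-cities-in-italy",
  displayed_link: "https://www.celebritycruises.com › blog › best-food-ci...",
  date: "Nov 29, 2023",
  snippet: "9 Best Food Cities in Italy · Bologna · Palermo · Rome · Florence · " \
           "Cagliari · Sorrento · Genoa · Parma. Street view of Parma. Parma. Often ...",
  snippet_highlighted_words: ["Best Food Cities", "Italy"]
}.freeze

# Hypothetical helper: keep only the three fields we care about, as plain text.
def trimmed_text(result)
  "Title: #{result[:title]}\nLink: #{result[:link]}\nSnippet: #{result[:snippet]}\n"
end

json_size = JSON.generate(SAMPLE_RESULT).length
text_size = trimmed_text(SAMPLE_RESULT).length

puts "JSON: #{json_size} characters, trimmed text: #{text_size} characters"
```

The gap widens on a real response, which also carries thumbnails, favicons, redirect links, and everything outside the organic results.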

We'll handle this logic in a private textify_results method. I'll also add the OpenStruct responses to our call method.

# app/services/serpapi_services/search_service.rb

private

def textify_results(results)

concatenated_text = ""

results.each do |result|

concatenated_text += <<~RESULT

Title: #{result[:title]}

Link: #{result[:link]}

Snippet: #{result[:snippet]}

RESULT

end

return concatenated_text

end

Test it in rails console.

user@host:~/search-results-ai-analyzer$ rails c

Loading development environment (Rails 7.0.8)

irb(main):001* search_analysis = SearchAnalysis.new(

irb(main):002* query: "italian city with best food",

irb(main):003* engine: "google"

irb(main):004> )

irb(main):005> test_search = SerpApiServices::SearchService.new(search_analysis).call

=> #<OpenStruct success?=true, results="Title: 9 Best Food Cities in Ita...

irb(main):006> test_search.results

=> "Title: 9 Best Food Cities in Italy\nLink: https://www.celebritycruises.com/blog/best-food-cities-in-italy\nSnippet: 9 Best Food Cities in Italy · Bologna · Palermo · Rome · Florence · Cagliari · Sorrento · Genoa · Parma. Street view of Parma. Parma. Often ...\n\nTitle: 10 of the best Italian cities for food - Times Travel\nLink: https://www.thetimes.co.uk/travel/destinations/europe/italy/italy-food-guide\nSnippet: Italy's best cities for food · 1. Florence · 2. Venice · 3. Naples · 4. Rome · 5. Milan · 6. Bologna · 7. Genoa · 8. Parma.\n\n

...

All good here. Onto the next task.
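For reference, the call method with its OpenStruct responses isn't shown above. A minimal sketch of how it might look, mirroring ChatService, follows. DemoSearchService and StubSearch are hypothetical names so the example runs without the gem or an API key; in the app, the stub's place would be taken by SerpApiSearch.new(q: ..., engine: ...).

```ruby
require "ostruct"

# Hypothetical stub returning data shaped like search.get_hash.
class StubSearch
  def initialize(results)
    @results = results
  end

  def get_hash
    { organic_results: @results }
  end
end

class DemoSearchService
  def initialize(search)
    @search = search
  end

  def call
    organic_results = @search.get_hash[:organic_results]
    text = textify_results(organic_results)
  rescue StandardError => e
    OpenStruct.new(success?: false, error: e)
  else
    OpenStruct.new(success?: true, results: text)
  end

  private

  # Same idea as textify_results above, written with map/join.
  def textify_results(results)
    results.map do |result|
      <<~RESULT
        Title: #{result[:title]}
        Link: #{result[:link]}
        Snippet: #{result[:snippet]}

      RESULT
    end.join
  end
end

sample = [{ title: "9 Best Food Cities in Italy",
            link: "https://www.celebritycruises.com/blog/best-food-cities-in-italy",
            snippet: "9 Best Food Cities in Italy · Bologna · Palermo ..." }]

puts DemoSearchService.new(StubSearch.new(sample)).call.results
puts DemoSearchService.new(StubSearch.new(nil)).call.success?
```

Passing nil for the results simulates a response with no organic_results key; the resulting NoMethodError is rescued and reported as a failure rather than crashing the caller.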

Linking It All Together

We finally have both APIs wired up and a model ready to go. Now we need to link all these pieces together.

We'll start in our SearchAnalysis model and create methods that will call our API service objects.

First, a simple run_search method. This will call our SerpApi service and, if the response is successful, store the results in search_results.

If it fails, we'll record the error message and mark the record as failed. Ideally, the error message would be cleaned up to present something user friendly, but that can be left aside for now.

# app/models/search_analysis.rb

def run_search

# We only run the search if the status is "pending_search"

return unless self.status == "pending_search"

search_response = SerpApiServices::SearchService.new(self).call

if search_response.success?

self.search_results = search_response.results

self.status = "pending_chat"

else

self.search_results = "Failed due to error: #{search_response.error.to_s}"

self.status = "failed"

end

self.save

end

A quick test in rails console confirms this works and our search results get saved.

user@host:~/search-results-ai-analyzer$ rails c

Loading development environment (Rails 7.0.8)

irb(main):001* search_analysis = SearchAnalysis.new(

irb(main):002* query: "italian city with best food",

irb(main):003* engine: "google",

irb(main):004> prompt: "Analyze the search results below and tell me which city I should visit if I'm a foodie. Tell me why as well.")

=>

#<SearchAnalysis:0x00007fbc9744f470

...

irb(main):005> search_analysis.run_search

TRANSACTION (0.1ms) begin transaction

SearchAnalysis Create (0.4ms) INSERT INTO "search_analyses" ("query", "engine", "prompt", "search_results", "chat_response", "status", "created_at", "updated_at") VALUES (?, ?, ?, ?, ?, ?, ?, ?) [["query", "italian city with best food"], ["engine", 0], ["prompt", "Based solely on these search results, where should I go in Italy for a delicious vacation?"], ["search_results", "Title: 9 Best Food Cities in Italy\nLink: https://www.celebritycruises.com/blog/best-food-cities-in-italy\nSnippet: 9 Best Food Cities in Italy · Bologna · Palermo · Rome · Florence · Cagliari · Sorrento · Genoa · Parma. Street view of Parma. Parma. Often ...\n\n...

TRANSACTION (3.4ms) commit transaction

=> true

Next, we'll create a run_chat method. The logic will effectively be identical to the run_search method.

# app/models/search_analysis.rb

def run_chat

# We only run the chat if the status is "pending_chat"

return unless self.status == "pending_chat"

chat_response = OpenAIServices::ChatService.new(self).call

if chat_response.success?

self.chat_response = chat_response.results

self.status = "complete"

else

self.chat_response = "Failed due to error: #{chat_response.error.to_s}"

self.status = "failed"

end

self.save

end

And now the test.

user@host:~/search-results-ai-analyzer$ rails c

Loading development environment (Rails 7.0.8)

irb(main):001> search_analysis = SearchAnalysis.last

SearchAnalysis Load (0.2ms) SELECT "search_analyses".* FROM "search_analyses" ORDER BY "search_analyses"."id" DESC LIMIT ? [["LIMIT", 1]]

=>

#<SearchAnalysis:0x00007f9d61599d30

...

irb(main):002> search_analysis.run_chat

TRANSACTION (0.1ms) begin transaction

SearchAnalysis Update (0.4ms) UPDATE "search_analyses" SET "chat_response" = ?, "updated_at" = ? WHERE "search_analyses"."id" = ? [["chat_response", "Based on the search results, the city you should visit if you're a foodie is Bologna. Bologna consistently appears in multiple search results and is mentioned in titles such as \"9 Best Food Cities in Italy,\" \"10 of the best Italian cities for food,\" and \"Our Favorite Food Cities In Italy.\" Bologna is known for its rich culinary traditions, including dishes like tortellini, ragu, and mortadella. It is also praised for its local markets, food festivals, and vibrant food scene. Therefore, if you want to experience authentic Italian cuisine and immerse yourself in a city with a strong food culture, Bologna is the ideal choice."], ["updated_at", "2024-01-24 12:14:40.214658"], ["id", 6]]

TRANSACTION (2.8ms) commit transaction

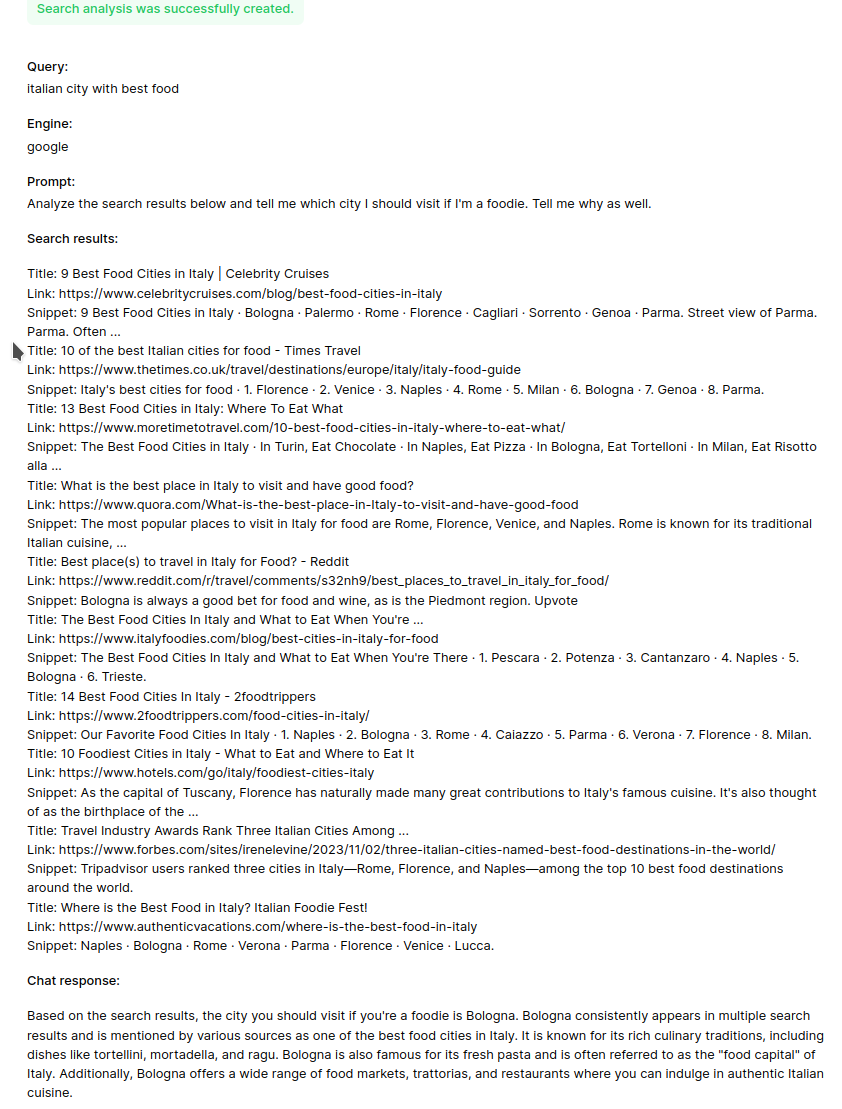

=> trueBeautiful. We got a helpful response from ChatGPT telling us we should go to Bologna for our Italian foodie vacation.

Thanks to our scaffolding, we know our views will already display all this information to the user. We also already tested the create action of our controller.

We could call the search and chat services from our controller, but I think a cleaner way would be to handle all this in the model. We'll always save a search analysis request with valid data so we don't need the controller to worry about any of that. Instead, we can use our run_search and run_chat methods as callbacks that fire after the record is created.

# app/models/search_analysis.rb

class SearchAnalysis < ApplicationRecord

enum :engine, { google: 0, bing: 1, duckduckgo: 2, yahoo: 3, yandex: 4, baidu: 5 }

enum :status, { pending_search: 0, pending_chat: 1, complete: 2, failed: 3 }

validates :query, presence: true

validates :engine, presence: true

validates :prompt, presence: true

after_create :run_search, :run_chat

...

end

One potential concern here is that if the search fails, our chat would also fail (or not be useful) because we wouldn't have any search results to send ChatGPT. Fortunately, we already handled that: run_chat fully executes only if the status is pending_chat, which is only set if run_search was successful.
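The guard logic can be seen in isolation with a plain-Ruby stand-in for the model. DemoAnalysis is hypothetical; it replaces ActiveRecord and the two services with a simple flag so the flow can run on its own.

```ruby
# Hypothetical stand-in for SearchAnalysis; no database or API calls involved.
class DemoAnalysis
  attr_reader :status, :calls

  def initialize(search_succeeds:)
    @status = "pending_search"
    @search_succeeds = search_succeeds
    @calls = []
  end

  def run_search
    return unless status == "pending_search"

    @calls << :search
    @status = @search_succeeds ? "pending_chat" : "failed"
  end

  def run_chat
    return unless status == "pending_chat"

    @calls << :chat
    @status = "complete"
  end

  # Mirrors after_create :run_search, :run_chat firing in order.
  def create
    run_search
    run_chat
    self
  end
end

happy = DemoAnalysis.new(search_succeeds: true).create
sad = DemoAnalysis.new(search_succeeds: false).create

puts "#{happy.status} #{happy.calls.inspect}"
puts "#{sad.status} #{sad.calls.inspect}"
```

When the search fails, the status is already "failed" by the time run_chat fires, so the chat step returns early and no OpenAI call is made.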

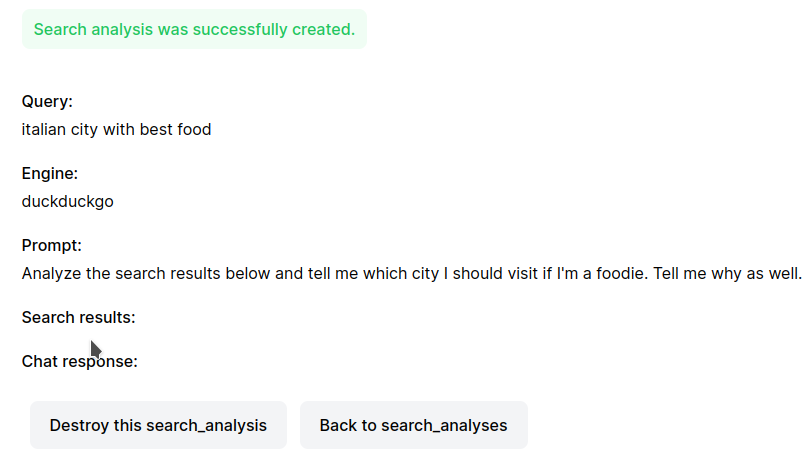

Now we can try requesting a search analysis through our form. Rails will automatically fire our methods to call SerpApi and ChatGPT. The form submission will take a few seconds to process because it has to make two API calls. With a little patience we finally see that it all works.

Rails renders the search results and chat response by default without any newlines so it's worth adding simple_format to these.

# app/views/search_analyses/_search_analysis.html.erb

<p class="my-5">

<strong class="block font-medium mb-1">Search results:</strong>

<%= simple_format(search_analysis.search_results) %>

</p>

<p class="my-5">

<strong class="block font-medium mb-1">Chat response:</strong>

<%= simple_format(search_analysis.chat_response) %>

</p>
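For intuition, here is a rough plain-Ruby approximation of what simple_format does with newlines: blank lines become paragraph breaks and single newlines become <br /> tags. It is a simplified sketch, not Rails' implementation (the real helper also sanitizes the HTML and accepts options), and rough_simple_format is a hypothetical name.

```ruby
# Hypothetical approximation of simple_format's newline handling only.
def rough_simple_format(text)
  paragraphs = text.to_s.strip.split(/\n{2,}/)
  paragraphs.map do |paragraph|
    "<p>#{paragraph.gsub("\n", "\n<br />")}</p>"
  end.join("\n\n")
end

sample = "Title: 9 Best Food Cities in Italy\n" \
         "Link: https://www.celebritycruises.com/blog/best-food-cities-in-italy\n\n" \
         "Title: 10 of the best Italian cities for food - Times Travel"

puts rough_simple_format(sample)
```

This is why the stored search results, which separate entries with a blank line, render as one paragraph per result.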

It's rather ugly, but everything is now working. Perhaps we can pass this off to some designers to pretty up.

Areas to Improve

What we have is a good starting point, but it could be a lot better. Some possible improvements:

* Process API calls in a background job.

* Display user-friendly error messages in case calls to SerpApi or OpenAI fail.

* Pretty up the UI.

* Allow users to store prompts for future use.

* Add support for all the other search engines SerpApi supports like Google News, Finance, Maps, Jobs, etc.

* Allow users to send additional chat messages to ChatGPT and present this in a chatbox.

* Retry failed search analyses.

Source Code

The full source code of this application is available on GitHub.